Alter Snowflake with Dynamic SQL and Python

Adding Python to Alteryx can make your Snowflake dreams come true

Enter the python tool

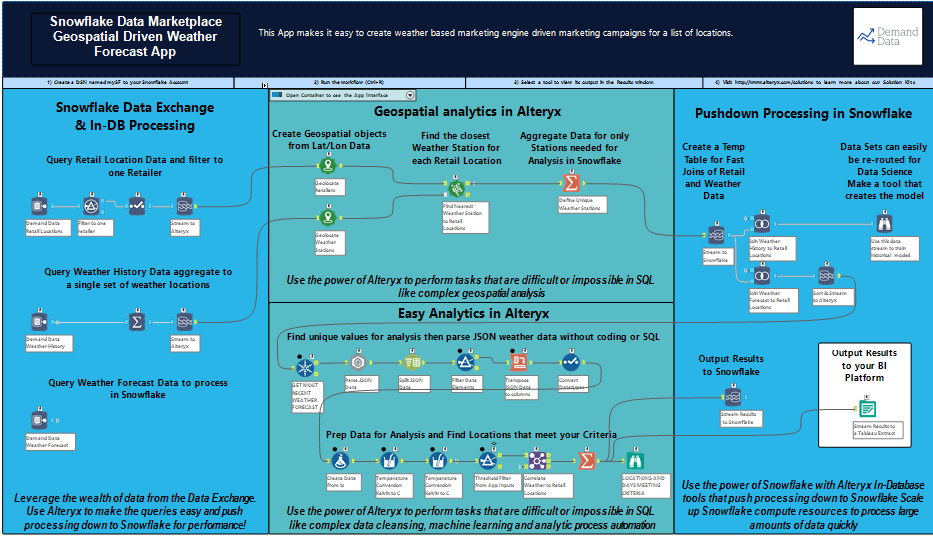

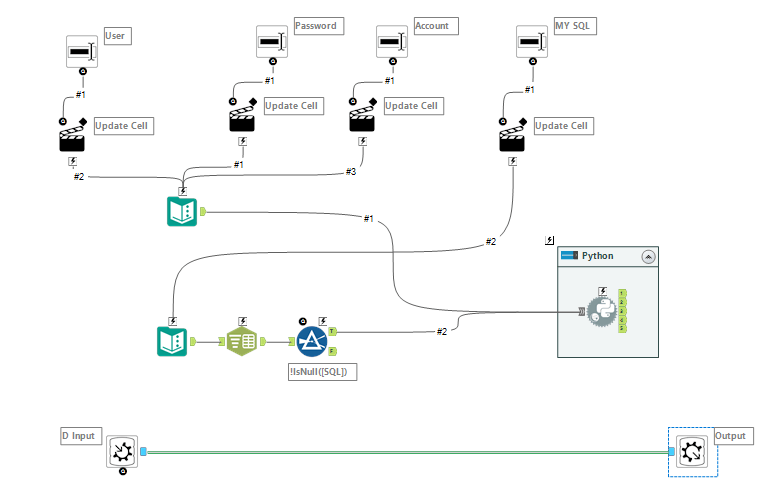

Now, I am more business and less code. I muddle through code when I have to, and I finally decided this was something I had to do. I had to be able to use a mix of custom SQL with the native in-db tools on Snowflake. I was pleasantly surprised that this was WAY easier than I expected with building some custom macros that take advantage of the Snowflake -Python connectors.

To Start with, the newer versions of Alteryx with integrated jupyter notebooks help a lot!

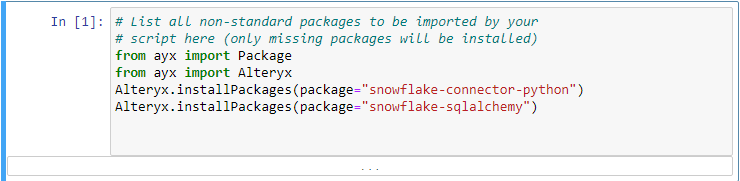

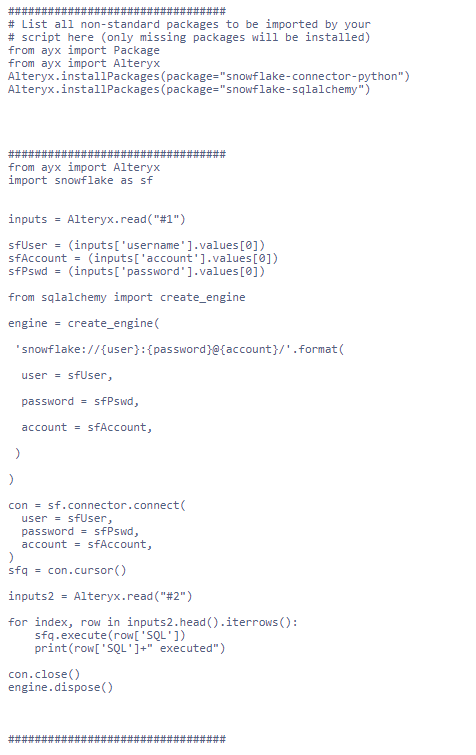

When you drop a Python tool on to the Alteryx canvas, this notebook will open up. You can enter in your code (or for us non-coders - find the code on stack overflow and paste it in :))

This code loads the packages that are required to execute code

against Snowflake. As a note, you may get an error when loading these. If you do, try to run Alteryx as an administrator on your machine. You also may get success by adding

install_type=User

to your command (package = "snowflake-sqlalchemy", install_type=User

).

Right clicking on Alteryx designer and running as administrator is what worked for me.

from ayx import Package

from ayx import Alteryx

Alteryx.installPackages(package="snowflake-connector-python")

Alteryx.installPackages(package="snowflake-sqlalchemy")

I am using the standard code samples from snowflake on how to set up your connections; With one little trick :).

As your see, we are creating a variable object, inputs, which will read the data stream #1 that I drag into my input tool. This data stream will contain the basic information about the Snowflake account. We can set these values using interface tools as part of a macro or analytic app.

The code sfUser = (inputs['username'].values[0]) sets that variable to the first row of the column 'username'.

The rest below is just the standard Snowflake connector syntax. You will know it is working as we have requested Snowflake to send the current version (which you will see in your Python tool or in your Alteryx messages when you run this in a workflow.)

from ayx import Alteryx

import snowflake as sf

inputs = Alteryx.read("#1")

sfUser = (inputs['username'].values[0])

sfAccount = (inputs['account'].values[0])

sfPswd = (inputs['password'].values[0])

from sqlalchemy import create_engine

engine = create_engine(

'snowflake://{user}:{password}@{account}/'.format(

user = sfUser,

password = sfPswd,

account = sfAccount,

)

)

con = sf.connector.connect(

user = sfUser,

password = sfPswd,

account = sfAccount,

)

sfq = con.cursor()

inputs2 = Alteryx.read("#2")

for index, row in inputs2.head().iterrows():

sfq.execute(row['SQL'])

print(row['SQL']+" executed")

con.close()

engine.dispose()

Now for the dynamic part

What we are doing here is creating a second box for the SQL script we want to run.

The command sfg.execute('WILL EXECUTE THIS SQL SCRIPT'). This problem with this is it only accepts one statement at a time.

That said, we can read in multiple SQL statements and use a very simple loop; to loop through each statement and execute it.

inputs2 = Alteryx.read("#2")

for index, row in inputs2.head().iterrows():

sfq.execute(row['SQL'])

print(row['SQL']+" executed")

con.close()

engine.dispose()

This means we can input individual SQL statements and execute them in an Alteryx workflow

Thats a wrap!

Now, it's just a question of connecting a few interface tools. I am splitting the SQL statement into rows using a semicolon to mirror the effect of writing out a long SQL statement. The input and output tools on the bottom are really just there to give this macro a seamless in-db feel. We could very easily tweak the Python macro to return a Snowflake select statement as a data frame and have an output from the macro as well. However, the purpose of this macro is to automate some administrative tasks in a workflow. I can now have complete command of my Snowflake environment from within Alteryx, and I can easily build up a suite of macros to deal with common tasks or execute anything remotely in Snowflake from Alteryx!

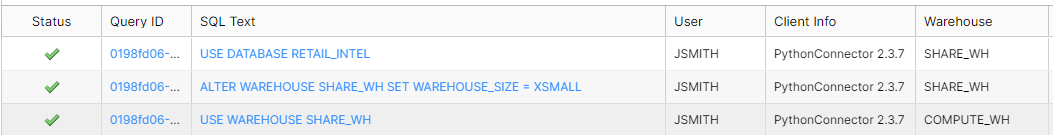

Now in Action!

Full code below: